Developing a platform for AI for assistive autonomy

2 October 2024

Prof Subramanian Ramamoorthy leads the Edinburgh Autonomous Vehicle Initiative, which is developing a platform for AI for assistive autonomy. Here he and Dr Alejandro Bordallo Micó write about their work and how the Edinburgh International Data Facility supports it.

Emerging technologies for advanced driver assistance systems (ADAS) and autonomous driving (AD) are poised to transform how we experience mobility. Alongside electrification and software-defined vehicles, ADAS/AD is one of the most significant trends in the automotive sector. For these systems to be deployed safely in urban environments, we need good models of the behaviour of vulnerable road users and other occupants of the roads to inform policies for human-vehicle interactions.

The newly formed Edinburgh Autonomous Vehicle Initiative seeks to bring together wide-ranging expertise within the School of Informatics and the University of Edinburgh to develop a platform for AI for Assistive Autonomy.

This initiative was created with the donation of a vehicle platform by Five AI (a Bosch GmbH company), with whom we have had a long-standing association.

Image above: our AV is a Ford Mondeo modified with a sensor-suite top hat, lidar side mounts on the A pillars and a compute server in the boot. The AV has 16 camera mounts, 3 lidar and 8 radar sensors, a Global Navigation Satellite System (GNSS) receiver, Inertial Measurement Unit (IMU) sensors and a drive-by-wire kit which enables vehicle control via software running on the server. When operating with all sensor feeds, the data throughput can require bandwidths as high as 10 Gb/s.

The Edinburgh AV dataset

The first step within this initiative is to collect a novel dataset that includes both perception and action data, gathered in the city of Edinburgh and representing some unique urban environments. Many previously available datasets focus primarily on perception data, aimed at mapping and localisation research. In contrast, we are collecting and curating paired data on what the vehicle perceives, using a range of sensors including cameras, lidar and possibly radar, and on what the driver did in response to the activities in the vicinity of the car.
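As a rough illustration of what "paired" data could mean in practice, the sketch below matches each sensor frame to the nearest recorded driver action by timestamp. It is a minimal sketch under stated assumptions, not a description of the Edinburgh AV pipeline: the `DriverAction` fields, the `pair_frames_with_actions` function and the 50 ms tolerance are all hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class DriverAction:
    """Hypothetical record of what the driver did at one instant."""
    t: float         # timestamp in seconds
    steering: float  # assumed normalised steering input
    throttle: float  # assumed normalised throttle input
    brake: float     # assumed normalised brake input


def pair_frames_with_actions(frame_times, actions, max_gap=0.05):
    """Pair each frame timestamp with the nearest action in time.

    `actions` must be sorted by timestamp. Frames with no action
    within `max_gap` seconds (an assumed tolerance) are dropped.
    """
    action_times = [a.t for a in actions]
    pairs = []
    for ft in frame_times:
        i = bisect_left(action_times, ft)
        # The nearest action is either just before or just after the frame.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(actions)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(action_times[j] - ft))
        if abs(action_times[best] - ft) <= max_gap:
            pairs.append((ft, actions[best]))
    return pairs


# Illustrative values only, not real recordings.
actions = [
    DriverAction(0.0, 0.00, 0.2, 0.0),
    DriverAction(0.1, 0.05, 0.2, 0.0),
    DriverAction(0.2, 0.10, 0.0, 0.3),
]
pairs = pair_frames_with_actions([0.02, 0.11, 0.5], actions)
```

In this toy run the frame at 0.5 s is dropped because no action lies within the tolerance; a real pipeline would also need to handle clock synchronisation between sensors, which this sketch ignores.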

This will enable the learning of new behaviour models and, in the longer term, the creation of interaction policies for safe autonomous driving in such environments. While some autonomous driving technology might take some time to become commonly available, this dataset will also enable the development of new forms of driver-assist technologies.

Data and storage infrastructure

The cooperation with EPCC is aimed at building the data and storage infrastructure for this new dataset and, in the longer term, the annotation and model training pipelines associated with it.

As part of data collection, the Edinburgh International Data Facility (EIDF) Data Science Cloud capabilities enable the offloading and pre-processing of the terabytes of data that an AV sensor suite produces. This is key to building a curated and accessible dataset for the research and development of self-driving and related technologies. Data collection in Edinburgh is expected to start in late 2024, and the dataset will be made available through the EIDF data publishing service once ready.
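To give a sense of scale, the short calculation below converts the 10 Gb/s peak figure quoted for the full sensor suite into a data volume per hour. It is only a back-of-the-envelope sketch: it assumes sustained peak throughput, decimal units (1 TB = 10^12 bytes) and no compression, and the function name `terabytes_per_hour` is our own.

```python
def terabytes_per_hour(gigabits_per_second):
    """Convert a sustained rate in Gb/s into terabytes recorded per hour."""
    bytes_per_second = gigabits_per_second * 1e9 / 8  # 8 bits per byte
    return bytes_per_second * 3600 / 1e12             # 3600 s/h, 1e12 B/TB


peak_tb_per_hour = terabytes_per_hour(10)
print(peak_tb_per_hour)  # 4.5
```

Under these assumptions, an hour of driving at peak throughput would produce about 4.5 TB. Actual volumes depend on which sensors are recording and how the data is encoded.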

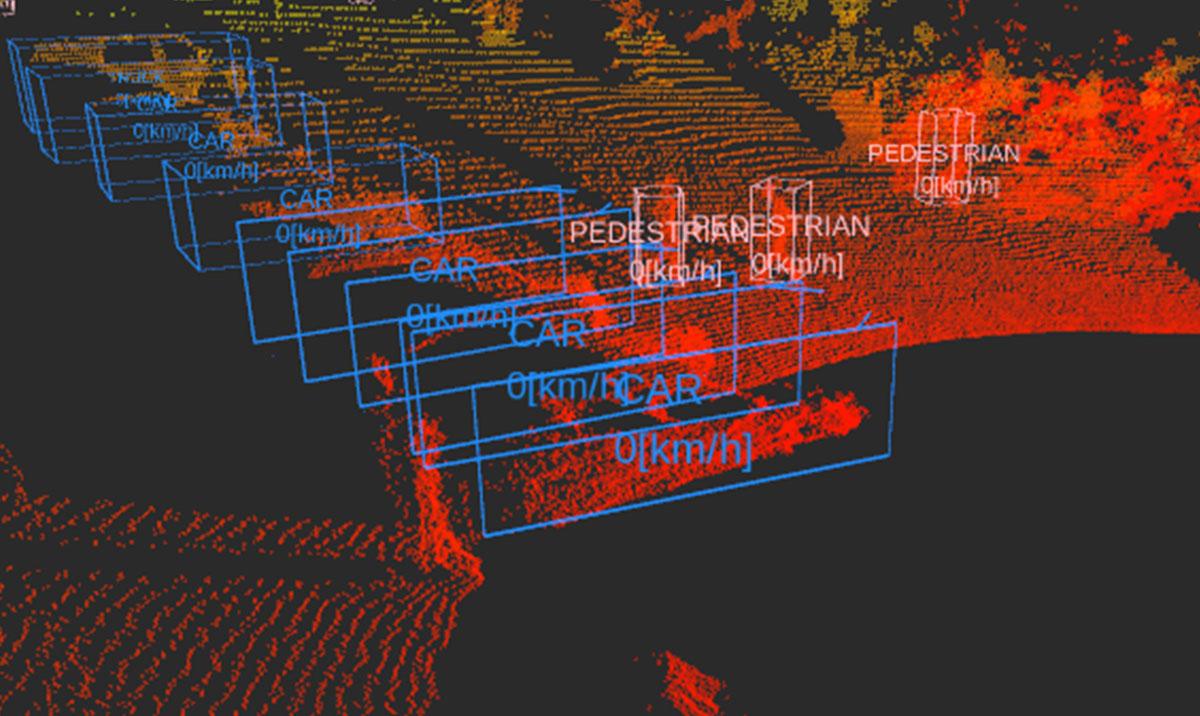

Image above: testing perception capabilities at King’s Buildings, Edinburgh (camera 2D detections on the left and lidar 3D detections on the right). Our dataset will consist of raw sensor outputs, calibrated and configured ready for processing by software algorithms. A classic task in autonomous driving is to detect agents in the scene (such as vehicles and pedestrians). Our dataset will be a valuable resource for this type of research.

Image above: a view of Edinburgh's Grassmarket area coming from West Port, between Edinburgh Castle and Flodden Wall. The dataset will contain high-definition data intended for research and development of autonomous driving technologies. The same data may also serve other related purposes, eg creating a digital map of Edinburgh’s infrastructure and buildings.

Further information

The team

This initiative is led by Prof Subramanian Ramamoorthy, Personal Chair of Robot Learning and Autonomy within the School of Informatics and Director of the Institute of Perception, Action and Behaviour. The core engineering team involved in the creation of the dataset includes Dr Alejandro Bordallo Micó, Senior Research Engineer; Mr Hector Cruz Gonzalez, Research Software Engineer; and Mr Jim Duffin, Field Vehicle Engineer. This work also benefits from alignment with PhD student research, eg Ms Servinar Cheema’s project, which focuses on new models of pedestrian-vehicle interactive decision making.

Links

Robust Autonomy and Decisions Group, School of Informatics, University of Edinburgh

Research profile of Prof Subramanian Ramamoorthy

Article in The Scotsman newspaper: What self-driving cars should be used for now - and it's not the rich, declares Edinburgh University AI expert

Read more about the Edinburgh International Data Facility's suite of services to support collaborative data science: Edinburgh International Data Facility: open for public data

Edinburgh International Data Facility

Authors

Prof Subramanian Ramamoorthy: s.ramamoorthy@ed.ac.uk

Dr Alejandro Bordallo Micó: alex.bordallo@ed.ac.uk