ePython: supporting Python on many core co-processors

10 November 2016

Supercomputing, the biggest conference in our calendar, is on next week, and one of the things I am doing there is presenting a paper at the workshop on Python for High-Performance and Scientific Computing.

This paper is entitled “ePython: An implementation of Python for the many-core Epiphany coprocessor” and discusses the Python interpreter and runtime I have developed for low-memory, many-core architectures such as the Epiphany.

Some background...

To show why a new interpreter was needed, let me explain some background. A couple of years ago Adapteva released a co-processor called the Epiphany. The most common form in circulation is the Epiphany III, which contains 16 RISC cores and delivers 32 GFLOP/s. Each core has 32KB of SRAM, and not only is this architecture very low power (16 GFLOPs per Watt) but the entire eco-system, from the hardware to the software, is freely available and open source. To enable people to play with this technology, Adapteva developed the Parallella, which combines an Epiphany III with a dual core ARM host processor and 1GB of main board RAM (32MB of which is shared between the Epiphany and ARM). At under $100 this is a very cheap way of gaining a reasonable number of cores and being able to experiment with writing parallel codes.

Over 10,000 Parallellas have been shipped and EPCC is a member of the Parallella University Program. The idea behind this is “supercomputing for everybody”. However, there is a problem: it is difficult, especially for novices, to write parallel codes to run on the Epiphany. There are a host of reasons why this is the case: having to write code in C, different structure alignments between the host and co-processor, no direct IO on the Epiphany, and the Epiphany requiring that all pointers align at specific memory boundaries (integers and floats must be aligned on 4 byte word boundaries). This last one is my personal favourite, because if you get it wrong when de-referencing a pointer then the core simply hangs until it is reset. There is no hardware caching and it is very expensive for the Epiphany to access shared memory, so ideally you want the entirety of your code and data to reside in the 32KB on chip memory, which rules out libc!

Based on all of this I thought that Python could really help. Wouldn’t it be great if someone could go from “zero to hero” (i.e. with no prior experience of writing parallel code, get something running on the Epiphany cores) in less than one minute?

Memory limit problems

The high level nature of Python and people’s general familiarity with it are great, but the problem is the 32KB memory limit! Common Python interpreters, such as CPython, are many megabytes and even “micro” versions of Python (such as MicroPython) are hundreds of KBs. It is actually worse than this, because we have less than 32KB available: there still needs to be room for the user’s byte code and program data. Therefore I set the memory limit of the core’s interpreter and runtime at 24KB, which leaves 8KB for everything else. This everything else (user’s byte code and data) can “flow over” into shared memory for larger Python codes, but there is a very significant performance cost here, so it is to be avoided if possible.
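To make the budget arithmetic concrete, here is a minimal sketch in ordinary Python (not ePython code). The function name `place` and the example sizes are hypothetical. It assumes a simple model in which whatever does not fit in the remaining 8KB flows over into shared memory.

```python
CORE_MEMORY = 32 * 1024          # on-chip SRAM per Epiphany core (from the text)
INTERPRETER_BUDGET = 24 * 1024   # limit set for the interpreter and runtime
USER_BUDGET = CORE_MEMORY - INTERPRETER_BUDGET  # what is left for byte code and data


def place(byte_code_size, data_size):
    """Return (bytes kept on core, bytes that flow over into shared memory)."""
    needed = byte_code_size + data_size
    on_core = min(needed, USER_BUDGET)
    return on_core, needed - on_core


print(USER_BUDGET)         # 8192
print(place(3000, 4000))   # (7000, 0): fits entirely on the core
print(place(6000, 4000))   # (8192, 1808): 1808 bytes flow over to shared memory
```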

ePython's approach

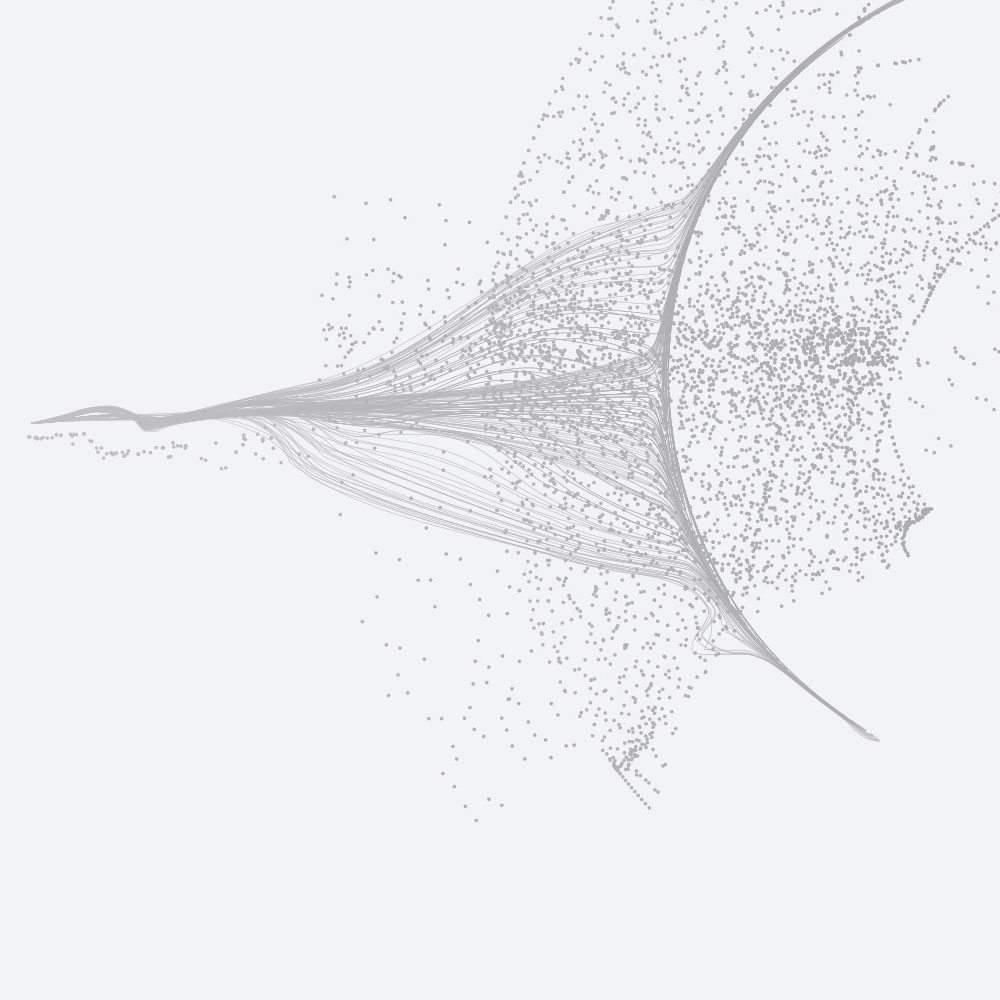

As illustrated in the diagram, ePython uses the host ARM CPU for lexing, parsing, optimisation and byte code generation of the user’s Python code. This byte code (which I designed specifically to be as tight as possible) and some meta information, such as the required size of the symbol table, are then transferred over onto each Epiphany core. The interpreter and runtime (written in C) then run on each Epiphany core, executing the byte code. ePython doesn’t support the entirety of the Python language (as that would be too large), but instead supports the imperative aspects of the language, including functions (i.e. not the OO features). I also try to put as much as possible into importable Python modules, which are only included in user code and executed by ePython if the user requires this functionality. Whilst code is running on the Epiphany cores, the host ARM acts as a “monitor” and sits there polling for commands from cores. In this way ePython can instruct the host to perform specific actions that the Epiphany cannot (such as IO). This is entirely transparent to the user, and it appears that the Epiphany itself is performing these actions.
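The split between host-side compilation and core-side interpretation can be sketched in ordinary Python. This is a toy illustration only: the opcodes, `compile_expr` and `run` are hypothetical, handle just integer expressions with `+` and `*`, and are not ePython’s actual byte code or runtime (which is written in C). It assumes Python 3.8 or later.

```python
import ast

# Hypothetical opcodes for illustration only; ePython's real byte code differs.
PUSH, LOAD, ADD, MUL = range(4)


def compile_expr(source):
    """'Host' side: parse an expression and emit (byte code, symbol names)."""
    symbols = []  # position in this list is the variable's symbol table slot
    code = []

    def emit(node):
        if isinstance(node, ast.BinOp):
            emit(node.left)
            emit(node.right)
            if isinstance(node.op, ast.Add):
                code.append(ADD)
            elif isinstance(node.op, ast.Mult):
                code.append(MUL)
            else:
                raise ValueError("unsupported operator")
        elif isinstance(node, ast.Constant) and isinstance(node.value, int):
            code.extend([PUSH, node.value])
        elif isinstance(node, ast.Name):
            if node.id not in symbols:
                symbols.append(node.id)
            code.extend([LOAD, symbols.index(node.id)])
        else:
            raise ValueError("unsupported syntax")

    emit(ast.parse(source, mode="eval").body)
    return code, symbols


def run(code, table):
    """'Core' side: a small stack interpreter over the byte code."""
    stack = []
    pc = 0
    while pc < len(code):
        op = code[pc]
        if op == PUSH:
            stack.append(code[pc + 1])
            pc += 2
        elif op == LOAD:
            stack.append(table[code[pc + 1]])
            pc += 2
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(left + right if op == ADD else left * right)
            pc += 1
    return stack.pop()


# Compile once on the "host", then run the same byte code on four "cores",
# each with its own symbol table (x = core id, y = 10).
code, symbols = compile_expr("x * 2 + y")
print(symbols)                                     # ['x', 'y']
print([run(code, [core, 10]) for core in range(4)])  # [10, 12, 14, 16]
```

The point of the design is that the expensive parts (parsing and code generation) never have to fit in the core’s memory. Only the compact byte code, the symbol table size and a small interpreter loop do.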

There are a whole host of things supported in this 24KB running on the Epiphany cores, from memory management and garbage collection, to parallelism (sending P2P messages and collectives), to functions as first class values (which can themselves be communicated), to task farming, to multi-dimensional arrays and mathematics functionality. It is also possible to run additional “virtual cores” on the host and for these to appear like extra Epiphany cores for increased parallelism. The user can also run “full fat” Python on the host (with an existing Python interpreter such as CPython) and have this code interact with ePython code running on the Epiphany.

So what's the point?

I think the two major uses of ePython are in fast prototyping and education. Of course it is not as quick as hand crafted C code for the Epiphany, but it is far more accessible. I have posted a number of blog articles on the Parallella blog about using ePython to explore the basics of parallelism, and there are a number of parallel examples in the repository covering common types of parallel codes, such as Jacobi, Gauss Seidel with SOR, Mandelbrot, master worker, parallel number sorting and generating PI via a Monte Carlo method.
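To give a flavour of the last of these, here is a minimal sketch of the Monte Carlo PI method in ordinary Python. It is not the example from the repository and does not use ePython’s parallel calls: the 16 “cores” are simulated one after another, and the names `hits_on_core` and `estimate_pi` are hypothetical.

```python
import random


def hits_on_core(core_id, samples):
    """Count random points in the unit square that land inside the quarter circle."""
    rng = random.Random(core_id)  # per-core seed so each core draws different points
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(num_cores=16, samples_per_core=10000):
    # Stand-in for a sum reduction across the cores.
    total_hits = sum(hits_on_core(c, samples_per_core) for c in range(num_cores))
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * total_hits / (num_cores * samples_per_core)


print(estimate_pi())
```

Each core works independently on its own samples and only a single number per core needs to be communicated at the end, which is why this kind of code suits a small-memory, many-core chip.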

Last month Adapteva announced their 1024 core Epiphany V co-processor. With an estimated power efficiency of 75 GFLOPs per Watt, this is a real many core behemoth that might go a long way to addressing some of the energy challenges of exascale. In the official release technical report Adapteva note, because of this ePython work, that Python is supported, and I think being able to quickly and simply write parallel Python codes to run on this 1024 core co-processor will be really useful for people.

Find out more

This blog article just scratches the surface of some of the challenges that this limited memory raises and techniques to solve them, along with the performance of parallel Python codes on the Epiphany. If you are interested then please come along to my talk, which is at 12 noon on Monday, November 14th in 155-C.

For more information about ePython you can visit the GitHub repository, and the paper will appear in the workshop proceedings published in the ACM Digital Library.