A shared compiler stack for stencil-based domain specific languages

5 July 2024

As the HPC community progresses further into the Exascale era, a key challenge is how to program our supercomputers. Even at the current scale of HPC, writing highly optimised distributed-memory code that efficiently exploits both CPUs and GPUs is a major investment.

One of the reasons for this complexity is that we most commonly use serial languages (such as Fortran or C) combined with libraries such as MPI, which leaves the programmer to handle tricky, low-level details of parallelism. Many of these programmers are scientists who see supercomputers primarily as an instrument for advancing their science, and they often lack the time or expertise to fully exploit HPC in their codes.

Solving the programmability challenge

Domain Specific Languages (DSLs) are one solution to this programmability challenge. They raise the level of abstraction so that programmers work with constructs that are closer to their problem and more familiar to them. Crucially, the compiler then has a much richer source of information on which to operate and from which to drive key decisions about parallelism and architecture-specific optimisations.
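
To make the "richer source of information" point concrete, here is a minimal sketch assuming a hypothetical declaration format: `StencilDecl`, `halo_widths` and `is_trivially_parallel` are illustrative names, not part of Devito, PSyclone or xDSL. Because the access pattern is stated explicitly rather than buried in loop indices, a compiler can read off facts such as how many ghost cells each field needs and whether every output point can be computed independently.

```python
from dataclasses import dataclass


@dataclass
class StencilDecl:
    """Hypothetical high-level stencil declaration: the field that is written,
    and the relative (di, dj) offsets at which each input field is read."""
    output: str
    reads: dict[str, list[tuple[int, int]]]  # field name -> read offsets


def halo_widths(decl: StencilDecl) -> dict[str, tuple[int, int]]:
    """Derive, per input field, how many ghost cells are needed in each dimension."""
    return {
        field: (max(abs(di) for di, _ in offs), max(abs(dj) for _, dj in offs))
        for field, offs in decl.reads.items()
    }


def is_trivially_parallel(decl: StencilDecl) -> bool:
    """If the output field is never read, no point depends on another
    point's new value, so all points can be computed in parallel."""
    return decl.output not in decl.reads
```

For a five-point Laplacian declared as `StencilDecl("v", {"u": [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]})`, the sketch reports a halo of one cell in each dimension and that the update is parallel, without analysing any loop code.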

DSLs are therefore effectively two things: the set of abstractions used by the programmer, and the compiler that turns programs written with those abstractions into highly efficient, parallel executables. In the current state of the art, DSL developers tend to write their own bespoke compilers, and these are highly siloed, sharing little or no infrastructure with other DSL compilers.

These bespoke compilers are a major problem, and arguably the reason why we have not yet seen wider adoption of DSLs in HPC. First, they require a (very) large investment of effort from the DSL developer to write, maintain and tune. Second, they represent a significant risk for end users, who tie their code to the DSL and its compiler with no guarantee that the compiler will be maintained in future, for instance to support new GPUs or to fix bugs. This is especially true when a DSL has been developed by a small research group, whose future can be uncertain.

However, looking across DSL compilers, we see that they share many commonalities, so it should be possible to develop a single compiler that is shared between DSLs. The elephant in the room is that, in doing so, we risk developing yet another DSL compiler with exactly the same downsides as all the others. Therefore, rather than starting from scratch, in the xDSL project funded by ExCALIBUR we have been developing shared DSL compiler infrastructure based upon MLIR, an established framework with a broad community behind it.

MLIR is a compiler framework that was originally developed by Google and then merged into upstream LLVM a few years ago. It is built from Intermediate Representation (IR) dialects and transformations: dialects provide operations at varying levels of abstraction, and lowerings between them progressively transform the code until it ultimately targets hardware. MLIR has gained significant traction in the compiler community, with buy-in from many organisations, including vendors who have contributed dialects and transformations tuned to their architectures. New dialects and transformations can also be written in MLIR.

In xDSL our objective has been to make MLIR accessible to DSL developers, so that they can concentrate on their DSL abstractions and leverage a shared, common compiler ecosystem that provides many building blocks. However, one of the challenges with MLIR, which is written in C++, is that it is internally complex and has a steep learning curve. Consequently, as part of xDSL we wrote a Python compiler toolkit that is 1:1 compatible with MLIR. Designed both for integration with DSLs and for fast prototyping of MLIR concepts before upstreaming them to MLIR itself, the toolkit significantly raises productivity when working with MLIR.

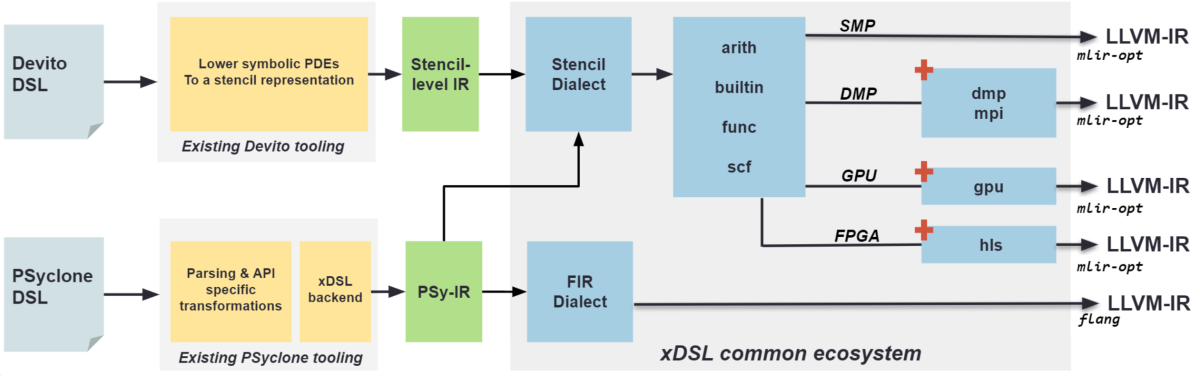

In xDSL we have been focused on two DSLs:

- Devito has been developed by Imperial College and is designed for seismic and medical imaging and inverse problems; programmers use it from Python.

- PSyclone has been developed by STFC and is used by several codes, including the Met Office's next-generation LFRic weather model; programmers work with it via Fortran.

While these two DSLs are at first glance rather different (Python vs Fortran, and seismic and medical imaging vs climate and weather), at the abstraction level they share the requirement to express stencils effectively.
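
For readers unfamiliar with the term, a stencil updates each grid point from a fixed pattern of its neighbours. Below is a minimal plain-Python sketch of a five-point Jacobi sweep; the function name `jacobi_step` is ours, and this is not Devito or PSyclone syntax. It is simply the kind of computation both DSLs need to express, whatever their surface language.

```python
def jacobi_step(u: list[list[float]]) -> list[list[float]]:
    """One Jacobi sweep of the 2D five-point Laplace stencil.

    Each interior point becomes the average of its four neighbours;
    boundary values are copied unchanged (fixed Dirichlet boundary)."""
    ny, nx = len(u), len(u[0])
    v = [row[:] for row in u]  # copy, so boundary rows and columns are preserved
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            v[j][i] = 0.25 * (u[j][i - 1] + u[j][i + 1] + u[j - 1][i] + u[j + 1][i])
    return v
```

On a 3x3 grid whose top row is `4.0` and whose other values are `0.0`, the single interior point becomes `1.0`. Every point reads the same relative neighbours, which is exactly the regularity a stencil abstraction captures and a compiler can exploit.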

We therefore built integrations for both Devito and PSyclone on top of MLIR using xDSL. As a result, these two DSLs are now a thin abstraction layer over a shared stack, with the stencil dialect (initially developed by ETH Zurich, but significantly tuned in xDSL) as the front door that both target.
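
The "thin front-end over a shared stack" architecture can be sketched in a few lines of Python. Everything here is hypothetical and greatly simplified: `Stencil1D` stands in for the stencil dialect, the two front-end functions are toys loosely echoing a Python-embedded and a Fortran-based DSL, and `lower_to_loops` stands in for the shared lowering. The real stack works on MLIR, not Python closures.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stencil1D:
    """Hypothetical stand-in for a shared stencil IR:
    out[i] = sum of coefficient * u[i + offset] over all terms."""
    terms: dict[int, float]  # offset -> coefficient


def python_frontend(coeffs_by_offset: dict[int, float]) -> Stencil1D:
    """Toy front-end for a Python-embedded DSL: offsets are already data."""
    return Stencil1D(dict(coeffs_by_offset))


def fortran_frontend(terms: list[str]) -> Stencil1D:
    """Toy front-end for a Fortran-flavoured DSL: parses terms like '-2.0*u(i)'."""
    parsed: dict[int, float] = {}
    for term in terms:
        coeff, access = term.split("*")
        index = access[access.index("(") + 1 : access.index(")")]  # e.g. "i-1"
        offset = int(index[1:]) if len(index) > 1 else 0  # "i-1" -> -1, "i" -> 0
        parsed[offset] = parsed.get(offset, 0.0) + float(coeff)
    return Stencil1D(parsed)


def lower_to_loops(st: Stencil1D) -> Callable[[list[float]], list[float]]:
    """Shared 'back-end': turn the IR into a loop over points whose neighbours exist."""
    left = max(0, -min(st.terms))   # cells needed to the left
    right = max(0, max(st.terms))   # cells needed to the right

    def kernel(u: list[float]) -> list[float]:
        out = list(u)  # boundary points are copied through unchanged
        for i in range(left, len(u) - right):
            out[i] = sum(c * u[i + off] for off, c in st.terms.items())
        return out

    return kernel
```

`python_frontend({-1: 1.0, 0: -2.0, 1: 1.0})` and `fortran_frontend(["1.0*u(i-1)", "-2.0*u(i)", "1.0*u(i+1)"])` produce the same `Stencil1D`, so `lower_to_loops`, and any optimisation applied to the shared IR, is written once and serves both. Applied to `[0.0, 1.0, 4.0, 9.0, 16.0]`, this second-difference stencil returns `[0.0, 2.0, 2.0, 2.0, 16.0]`.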

From this point onwards, the shared compiler stack lowers and optimises the code for CPUs, distributed-memory parallelism, GPUs and FPGAs. The performance delivered by our approach is broadly comparable to that of the existing bespoke DSL compilers: sometimes faster, sometimes a little slower.

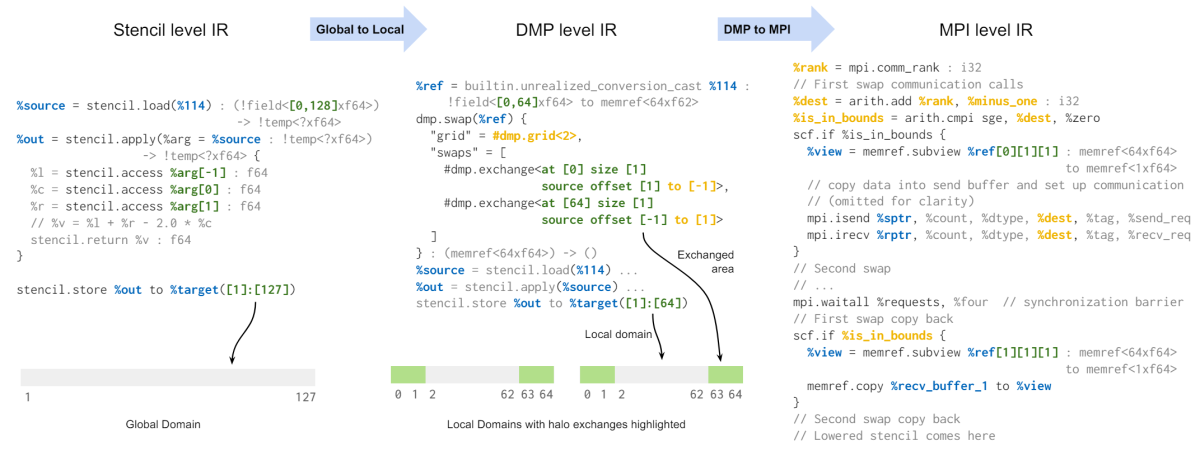

Distributed memory was an interesting case because MLIR did not support the necessary building blocks. We therefore developed and refined an MPI dialect in xDSL, and this has now been partially merged into mainline MLIR. This is an example of using xDSL as a fast prototyping environment to explore and refine concepts before they are made more widely available to the MLIR community.
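
To see why distributed memory needs dedicated building blocks, here is a single-process sketch of the domain-decomposition pattern a distributed stencil code follows: each rank owns a slice of the grid plus halo (ghost) cells, swaps boundary values with its neighbours, then applies the stencil locally. The function names are hypothetical and the exchange is simulated with list copies; this does not use MPI or the xDSL MPI dialect, where each copy would instead become a send/receive between neighbouring ranks.

```python
def split_with_halos(u: list[float], nranks: int, halo: int = 1) -> list[list[float]]:
    """Give each 'rank' an equal slice of the global array, padded with `halo`
    ghost cells (initialised to 0.0) on each side. Assumes len(u) % nranks == 0."""
    n = len(u) // nranks
    return [[0.0] * halo + u[r * n:(r + 1) * n] + [0.0] * halo for r in range(nranks)]


def exchange_halos(chunks: list[list[float]], halo: int = 1) -> None:
    """Simulated neighbour exchange. In a real code each assignment below would be
    an MPI send/receive between neighbouring ranks; outer halos stay at 0.0."""
    for r in range(len(chunks) - 1):
        left, right = chunks[r], chunks[r + 1]
        left[-halo:] = right[halo:2 * halo]    # right neighbour's first owned cells
        right[:halo] = left[-2 * halo:-halo]   # left neighbour's last owned cells


def local_second_difference(chunk: list[float], halo: int = 1) -> list[float]:
    """Apply u[i-1] - 2*u[i] + u[i+1] to every owned cell of a padded chunk.
    The stencil reaches one cell each way, so it needs halo >= 1."""
    return [chunk[i - 1] - 2 * chunk[i] + chunk[i + 1] for i in range(halo, len(chunk) - halo)]
```

With `u = [float(i * i) for i in range(8)]` split over two ranks, calling `exchange_halos` before `local_second_difference` gives `2.0` at every point except the two global boundaries. Skipping the exchange leaves the cells next to the rank boundary wrong, because they read the `0.0` placeholders instead of their neighbour's data; making this communication explicit and optimisable is what an MPI dialect is for.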

The FPGA target is another interesting example, because developing optimal code for FPGAs is well known to require significant expertise and time. Because we developed a highly efficient lowering from stencils to a High Level Synthesis (HLS) dialect, every DSL that integrates with our stack can now target FPGAs automatically, without any changes to the DSL itself.
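
One reason stencils suit FPGAs is that they can be evaluated as a stream, with each input value read from memory only once and neighbours kept in a small on-chip shift register. The sketch below mimics that idea in Python for a 1D, three-point stencil. It illustrates a pattern commonly used in HLS stencil designs and is not a claim about the exact code the xDSL lowering generates; `streaming_stencil` is a hypothetical name.

```python
from collections import deque
from collections.abc import Iterable, Iterator


def streaming_stencil(stream: Iterable[float],
                      coeffs: tuple[float, float, float]) -> Iterator[float]:
    """Apply c0*u[i-1] + c1*u[i] + c2*u[i+1] to a stream, reading each input once.

    A 3-element shift register holds the sliding window, mimicking how an HLS
    design keeps neighbours on-chip instead of re-reading memory.
    Only interior points are emitted (n - 2 outputs for n inputs)."""
    window: deque = deque(maxlen=3)  # oldest value drops out automatically
    for value in stream:
        window.append(value)  # shift in one new value per "clock cycle"
        if len(window) == 3:
            left, centre, right = window
            yield coeffs[0] * left + coeffs[1] * centre + coeffs[2] * right
```

Streaming `[0.0, 1.0, 4.0, 9.0, 16.0]` through the coefficients `(1.0, -2.0, 1.0)` yields `[2.0, 2.0, 2.0]`, one result per new input once the window is full, which on hardware corresponds to a pipeline producing one output per cycle.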

Further information

This work was presented in a paper at the ACM International Conference on Architectural Support for Programming Languages and Operating Systems. To read a more detailed account, please see the paper "A shared compilation stack for distributed-memory parallelism in stencil DSLs" at: https://arxiv.org/pdf/2404.02218

xDSL project: https://xdsl.dev

EPCC is part of the ExCALIBUR programme, a UK research programme that aims to deliver the next generation of high-performance simulation software for the highest-priority fields in UK research. Read more at: https://excalibur.ac.uk